This is a fragment of the paper “Experimental researches of efficiency of Kohonen maps for pattern visualization” by Victoria Repka, Andrey Sherstnyuk, Olga Ivchenko and Natalya Lesna, published in the Scientific and Technical Journal “Bionics Intellect”, Vol. 1(68), 2008, pp. 143-148.

See my Google Group:

http://groups.google.com/group/KhNUREstudents/web/Kohonen+Self.doc

The “Spirals” data set was again chosen to illustrate Kohonen map visualization because its results are intuitive and simple to present.

Two visualization methods are applied: the unified distance matrix (U-matrix) and the Hinton diagram.
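To make the U-matrix idea concrete, here is a minimal sketch of one common construction, assuming a rectangular grid and Euclidean distance. Between every pair of adjacent neurons an extra cell holds the distance between their weight vectors, so an R x C map becomes a (2R-1) x (2C-1) matrix; large values mark cluster borders, small values mark cluster interiors. The function name `expanded_u_matrix` is hypothetical, and two details are assumed conventions rather than the paper's method: a diagonal cell stores the mean of the two crossing diagonal distances, and a neuron cell stores the mean of its adjacent distance cells.

```python
import math


def expanded_u_matrix(weights):
    """Expanded U-matrix for a rectangular SOM.

    weights[i][j] is the weight vector of the neuron at row i, column j.
    Neuron (i, j) maps to cell (2i, 2j); the cells in between hold distances.
    """
    rows, cols = len(weights), len(weights[0])
    h, w = 2 * rows - 1, 2 * cols - 1
    u = [[0.0] * w for _ in range(h)]

    # Distance cells between horizontally, vertically and diagonally adjacent neurons.
    for i in range(rows):
        for j in range(cols):
            if j + 1 < cols:
                u[2 * i][2 * j + 1] = math.dist(weights[i][j], weights[i][j + 1])
            if i + 1 < rows:
                u[2 * i + 1][2 * j] = math.dist(weights[i][j], weights[i + 1][j])
            if i + 1 < rows and j + 1 < cols:
                # Assumed convention: mean of the two crossing diagonal distances.
                d1 = math.dist(weights[i][j], weights[i + 1][j + 1])
                d2 = math.dist(weights[i][j + 1], weights[i + 1][j])
                u[2 * i + 1][2 * j + 1] = (d1 + d2) / 2

    # Neuron cells: mean of the distance cells directly around them (assumed convention).
    for i in range(rows):
        for j in range(cols):
            r, c = 2 * i, 2 * j
            around = [u[r + dr][c + dc]
                      for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))
                      if 0 <= r + dr < h and 0 <= c + dc < w]
            u[r][c] = sum(around) / len(around) if around else 0.0
    return u
```

Coloring each cell by its value (dark for large distances, light for small ones) gives the usual U-matrix picture.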

These experiments were carried out to estimate the quality of visualization by means of the distance matrix. They showed that building a larger map is more effective than adding extra cells that display the distances between neurons when the map is colored.

The results led to the conclusion that, as the size of the topological map increases, the free cells are filled by inactive neurons. These inactive neurons effectively act as the distance-visualizing cells, alongside the active neurons; the extent to which they do so depends on the map size and on how the patterns are distributed over the nodes.

Later, results will be presented for a multidimensional data set: a sample of client data that the network divided into 4 groups, displayed by means of the Hinton diagram.

Welcome to the Artificial Life!

Monday 2 March 2009

Fragment of Paper “Experimental researches of efficiency of Kohonen maps for pattern visualization”

Sunday 1 March 2009

Friday 20 February 2009

Several Examples of visualisation by Kohonen's SOM

The result of visualizing the classical two-spirals sample with Kohonen's Self-Organizing Map is presented.

The task is to learn to discriminate between two sets of training points which lie on two distinct spirals in the x-y plane. These spirals coil three times around the origin and around one another. This appears to be a very difficult task for back-propagation networks and their relatives.

Problems like this one, whose inputs are points on the 2-D plane, are interesting because we can display the 2-D "receptive field" of any unit in the network.

The task is to train on the 194 I/O pairs until the learning system can produce the correct output for all of the inputs.
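The 194 points can be produced with the construction commonly used for the CMU two-spirals benchmark: 97 points per spiral, the angle growing in steps of π/16 up to 6π (three turns) while the radius shrinks linearly from 6.5, and the second spiral is the point reflection of the first. The constants and the function name `two_spirals` are assumptions taken from that common formulation; the post does not say how its sample was generated.

```python
import math


def two_spirals(points_per_spiral=97):
    """Return [((x, y), label), ...] for two interleaved spirals.

    Assumed benchmark-style construction: label 1 for the first spiral,
    label 0 for its point reflection through the origin.
    """
    data = []
    for i in range(points_per_spiral):
        angle = i * math.pi / 16.0              # 96 * pi/16 = 6*pi, i.e. three turns
        radius = 6.5 * (104 - i) / 104.0        # radius shrinks towards the centre
        x = radius * math.sin(angle)
        y = radius * math.cos(angle)
        data.append(((x, y), 1))
        data.append(((-x, -y), 0))              # second spiral: reflected point
    return data
```

With the default argument this yields 97 + 97 = 194 labelled points. For SOM visualization only the (x, y) coordinates are fed to the map; the labels are used afterwards to color the result.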

SOM with the following parameters:

map size - 30, neighbor function - Cone, radius - 10, epochs - 250

SOM with the following parameters:

map size - 30, neighbor function - Cos, radius - 5, epochs - 100
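The parameters above (map size, neighbor function, radius, epochs) can be read against a minimal online SOM training loop. This is a sketch under several stated assumptions, not the software used for the figures: "map size 30" is taken to mean a 30 x 30 grid, "Cone" is assumed to be a linear fall-off and "Cos" a quarter-cosine fall-off, and the radius and learning rate both decay linearly over the epochs. All function names (`neighbourhood`, `bmu`, `train_som`, `hits`) are hypothetical. The `hits` counts show which neurons are active; neurons with zero hits are the inactive ones, and the counts are what a Hinton-style diagram would draw as square sizes.

```python
import math
import random


def neighbourhood(kind, d, radius):
    """Neighbourhood weight for a neuron at grid distance d from the winner.

    ASSUMPTION: "cone" = linear fall-off, "cos" = quarter cosine; the exact
    shapes used in the original experiments are not stated.
    """
    if d > radius:
        return 0.0
    if kind == "cone":
        return 1.0 - d / radius
    if kind == "cos":
        return math.cos(math.pi * d / (2.0 * radius))
    raise ValueError(f"unknown neighbourhood function: {kind}")


def bmu(w, x):
    """Grid coordinates of the best-matching unit (closest weight vector)."""
    size = len(w)
    return min(((i, j) for i in range(size) for j in range(size)),
               key=lambda ij: math.dist(w[ij[0]][ij[1]], x))


def train_som(data, size=30, kind="cone", radius=10, epochs=250,
              lr0=0.5, seed=0):
    """Online SOM training on a size x size grid.

    Hypothetical schedule: radius and learning rate decay linearly per epoch.
    """
    rng = random.Random(seed)
    # Initialise every neuron with a copy of a randomly chosen sample.
    w = [[list(rng.choice(data)) for _ in range(size)] for _ in range(size)]
    for epoch in range(epochs):
        frac = epoch / epochs
        r = max(1.0, radius * (1.0 - frac))
        lr = lr0 * (1.0 - frac)
        for x in rng.sample(data, len(data)):  # shuffled pass over the data
            bi, bj = bmu(w, x)
            for i in range(size):
                for j in range(size):
                    h = neighbourhood(kind, math.dist((i, j), (bi, bj)), r)
                    if h > 0.0:
                        wij = w[i][j]
                        # Pull the neuron towards the sample, scaled by lr * h.
                        for k in range(len(x)):
                            wij[k] += lr * h * (x[k] - wij[k])
    return w


def hits(w, data):
    """Number of samples won by each neuron; zero marks an inactive neuron."""
    size = len(w)
    counts = [[0] * size for _ in range(size)]
    for x in data:
        bi, bj = bmu(w, x)
        counts[bi][bj] += 1
    return counts
```

Under these assumptions the first configuration corresponds to `train_som(points, size=30, kind="cone", radius=10, epochs=250)`, where `points` is a list of (x, y) tuples. In pure Python that means tens of millions of neighbourhood updates, so a vectorized (e.g. NumPy) version would be the practical choice at that size.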

Thursday 19 February 2009

Short Lecture about Perceptron and Kohonen's SOM

This is my short lecture on classical neural networks. It was delivered to students of the School of Mathematics and Systems Engineering, Växjö University, in September 2008.

Outline:

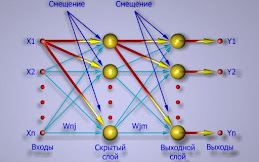

- Supervised Learning

- Perceptrons: Multilayer Perceptron

- Back-Propagation Training Algorithm

- Self-organizing map

- Data visualization techniques using the SOM

Fifth International Workshop on Artificial Neural Networks and Intelligent Information Processing

I recommend visiting this workshop.

http://www.icinco.org/ANNIIP.htm